In the era of Semantic SEO, where Google prioritizes meaning, context, and topical authority, a technical yet invisible factor plays a decisive role in performance:

Cost of Retrieval (CoR) – the computational cost Google incurs to crawl, parse, and index your web content.

Search engines are businesses, not just tools. Every crawl, every index, every render has a cost.

If your content increases that cost—because of duplication, poor structure, or bloated pages—you lose visibility.

This lesson dives deep into:

- What Cost of Retrieval means

- How it shapes crawl budget and indexing

- How to reduce it through technical SEO, canonicalization, and structured data

- How it relates to semantic optimization and topical maps

What is Cost of Retrieval?

Cost of Retrieval refers to the computational resources consumed by a search engine to:

- Crawl your page

- Render and analyze it

- Index it within the knowledge graph or search corpus

- Retrieve it quickly when relevant to a user query

Every web page is a unit of cost. High-effort, low-value pages waste resources.

Thin content, duplicate pages, tag archives, 404s = high retrieval cost

Efficient internal linking, relevant schema, canonicalization = low retrieval cost

Consider a website with 100 pages, of which only 25 are optimized and the other 75 offer little value. Those 75 pages suffer from:

- Thin content

- Duplication

- Technical errors

- Slow loading

- Crawling issues

Another website has 50 pages, all of them high quality with relevant content.

Which one would you prefer?

Which website will Google favor?

Think of it as two houses. The organized house is the website with high-quality content. The broken house is the low-quality website.

Google’s Business Model and Retrieval Economics

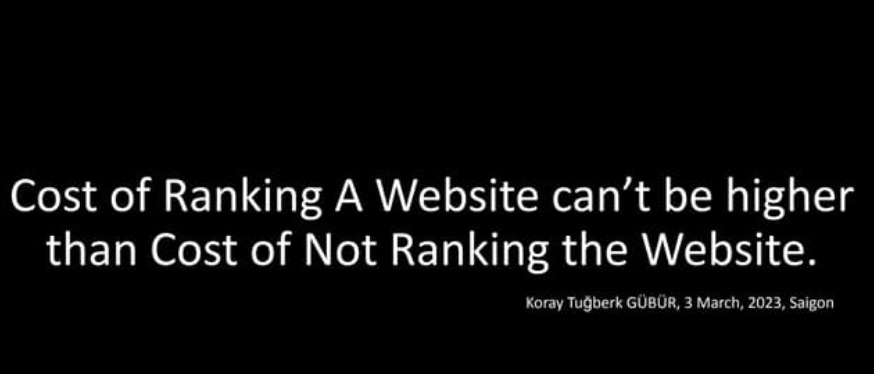

Google is a machine learning-powered database, not a public service. Every crawl is an expense. The lower the cost per valuable result, the more efficient the engine.

Low Cost = High Efficiency = Higher Ranking Potential

High Cost = Low Efficiency = Lower Crawl Priority

This directly ties into:

- Crawl Budget Allocation

- Indexing Prioritization

- SERP Placement and Visibility

Technical Breakdown: Crawl → Parse → Index → Retrieve

1. Crawling

- Robots visit the URLs on your website

- Crawl depth, frequency, and priority are determined by site structure and crawl budget

- Common problems: Orphan pages, unnecessary tag/feed archives, session URLs, and duplicate paths

2. Parsing

- HTML, JS, and structured data are parsed

- NLP algorithms scan entities, contextual relationships, and schema tags

- Poor markup or fragmented DOM trees increase parser load

3. Indexing

- Only useful, unique, and semantically relevant pages are indexed

- Google evaluates topical relevance, freshness, canonical signals, and semantic coverage

4. Retrieval

- When a query is issued, the index is scanned and scored

- Retrieval favors high-value, low-cost pages with clear semantic signals and fast rendering

How to Reduce Retrieval Cost: A Practical Framework

A. Site Structure & Navigation

- Limit depth to 2-3 clicks max

- Ensure clean internal linking to cornerstone pages

- Use breadcrumb navigation for crawl path clarity
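One way to check the depth guideline above is to measure click depth over your internal-link graph. The following is a minimal sketch, assuming you already have a mapping of each page to the pages it links to (for example, exported from a crawler). The function names and the sample site graph are hypothetical and only illustrate the idea.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search over an internal-link graph.

    links maps each URL to the list of URLs it links to.
    Returns {url: minimum number of clicks needed to reach it from home}.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, home, max_depth=3):
    """Return (pages deeper than max_depth, pages unreachable from home)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(all_pages - set(depths))
    return too_deep, orphans


# Hypothetical site graph, for illustration only.
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/semantic-seo/"],
    "/blog/semantic-seo/": ["/blog/semantic-seo/cost-of-retrieval/"],
    "/blog/semantic-seo/cost-of-retrieval/": [
        "/blog/semantic-seo/cost-of-retrieval/faq/"
    ],
    "/services/": [],
    "/old-landing-page/": ["/"],  # links out, but nothing links to it
}

too_deep, orphans = audit_structure(site, "/")
print("Deeper than 3 clicks:", too_deep)
print("Orphan pages:", orphans)
```

Pages reported as too deep are candidates for a link from a cornerstone page, and orphan pages need at least one internal link before crawlers can discover them through navigation.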

B. Block Useless URLs

Use robots.txt and meta tags to block:

- /tag/, /feed/, /?s=, /cart/, /thank-you/, session parameters

- Archive and pagination paths that offer no semantic value

User-agent: *

Disallow: /tag/

Disallow: /feed/

Disallow: /*?s=

C. Canonical Tags

Avoid duplicate indexing:

- Use rel="canonical" to consolidate ranking signals

- Canonicalize across:

- HTTP vs HTTPS

- www vs non-www

- trailing slashes

- URL parameters
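The variants listed above can be collapsed to a single canonical URL with a small normalization routine. This is a sketch under an assumed policy (HTTPS, no "www.", trailing slash on directory-style paths, tracking and session parameters dropped). The policy, the parameter names in IGNORED_PARAMS, and the function names are illustrative assumptions, so adapt them to your site's actual preferred URL format.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed for this sketch: parameters that never change the page content.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url):
    """Map URL variants to one preferred form (assumed policy, see above)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path or "/"
    # Add a trailing slash unless the last segment looks like a file name.
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"
    # Keep only meaningful parameters, sorted for a stable order.
    query = urlencode(sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ))
    return urlunsplit(("https", host, path, query, ""))


def canonical_link_tag(url):
    """Build the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}" />'


variants = [
    "http://www.example.com/blog/post?utm_source=x&sessionid=abc",
    "https://example.com/blog/post/",
    "https://www.example.com/blog/post",
]
print({canonical_url(v) for v in variants})
print(canonical_link_tag(variants[0]))
```

All three variants map to the same address, which is the point of canonicalization: one URL collects the signals instead of three competing copies.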

D. Use Noindex for Thin or Irrelevant Pages

Examples:

- Privacy Policy

- Terms & Conditions

- Empty search result pages

- Duplicate category/tag pages (esp. for blogs)

<meta name="robots" content="noindex, follow" />

Advanced Strategies for Semantic Retrieval Optimization

Use Topical Maps

- Group semantically related content under thematic silos

- Link internally using contextual anchor text

- Reduces index scatter by clustering similar entities

Think of your site as a structured knowledge domain, not a pile of articles.

Without Topical Mapping: Unstructured

With Topical Map: Structured & Organized

Structured Data: JSON-LD, Schema Markup

- Use entity markup: FAQPage, Article, Product, HowTo

- Helps Google’s semantic parsers understand the content faster

- Can shorten time-to-index by making the page's relevance easier to assess
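As an illustration of the markup described above, here is a minimal sketch that builds an Article JSON-LD snippet. The helper name, its arguments, and the sample values are hypothetical. The properties used (headline, author, datePublished, mainEntityOfPage) are standard schema.org properties, but the set your pages need depends on the content type.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a <script> element containing Article structured data."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date string
        "mainEntityOfPage": {"@type": "WebPage", "@id": url},
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so the JSON cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values, for illustration only.
snippet = article_json_ld(
    headline="What Is Cost of Retrieval?",
    author_name="Jane Doe",
    date_published="2025-01-10",
    url="https://example.com/blog/cost-of-retrieval/",
)
print(snippet)
```

The generated element goes in the page's HTML. Validate the result with a structured data testing tool before relying on it.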

Improve Core Web Vitals & Mobile Optimization

- Faster pages = less render time = lower retrieval cost

- Mobile-first indexing prioritizes responsive design + fast UX

Tools to Monitor Retrieval Efficiency

| Tool | Use Case |
|---|---|
| 🧰 Google Search Console | Crawl stats, index status, page discovery |
| 🧰 Screaming Frog / Sitebulb | Crawl error audit, duplicate detection |
| 🧰 Log File Analyzer | See what bots are actually crawling |
| 🧰 Prerender.io | Prerendered HTML for JS-heavy sites |
| 🧰 PageSpeed Insights | Mobile speed + UX insights |
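
To make the log file row concrete, here is a rough sketch of counting Googlebot requests per top-level path. It assumes access logs in the common "combined" format and matches on the user-agent string only. User agents can be spoofed, so a real audit should also verify the crawler (for example through reverse DNS). The sample log lines and function name are invented for illustration.

```python
import re
from collections import Counter

# Matches the request, status, and final quoted field (user agent)
# of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count Googlebot requests by top-level path and by status code."""
    by_section = Counter()
    by_status = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match or "Googlebot" not in match["agent"]:
            continue
        path = match["path"].split("?", 1)[0]  # ignore the query string
        first = path.strip("/").split("/", 1)[0]
        by_section[f"/{first}/" if first else "/"] += 1
        by_status[match["status"]] += 1
    return by_section, by_status


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
STAMP = "[10/Jan/2025:10:00:00 +0000]"

# Invented sample lines, for illustration only.
sample_log = [
    f'66.249.66.1 - - {STAMP} "GET /tag/seo/ HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - {STAMP} "GET /tag/news/?page=2 HTTP/1.1" 200 4980 "-" "{BOT}"',
    f'66.249.66.1 - - {STAMP} "GET /blog/cost-of-retrieval/ HTTP/1.1" 200 9300 "-" "{BOT}"',
    f'203.0.113.7 - - {STAMP} "GET /blog/cost-of-retrieval/ HTTP/1.1" 200 9300 "-" "{BROWSER}"',
    f'66.249.66.1 - - {STAMP} "GET /old-page HTTP/1.1" 404 310 "-" "{BOT}"',
]

sections, statuses = googlebot_hits(sample_log)
print(sections.most_common())
print(statuses.most_common())
```

If low-value paths such as tag archives or 404s take a large share of bot requests, that is crawl activity not being spent on your important pages.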

Real Impact: Retrieval Cost vs SEO Performance

| Page Type | Crawl Cost | SEO Value |
|---|---|---|
| High-quality blog post (with schema) | Low | High |
| Thin tag archive | High | Low |
| Product page with structured data | Low | High |
| Dynamic URL with session ID | High | Zero |
| Updated cornerstone article | Low | High |

More semantically enriched, topically relevant pages = better performance at lower retrieval cost

Retrieval Cost and Topical Authority

Topical authority reduces retrieval cost per page:

- Fewer ambiguous entities

- Fewer random jumps across themes

- More concentrated internal linking

- Better indexing-to-ranking conversion

A topical map is a semantic sitemap. It optimizes retrieval for machines.

Final Recommendations: Your Retrieval Optimization Checklist

- Block irrelevant pages in robots.txt

- Set canonical tags on every important URL

- Use noindex on legal and utility pages

- Implement structured data via JSON-LD

- Keep crawl depth ≤ 3 clicks

- Create internal linking from high-authority pages

- Audit thin or duplicate content monthly

- Use semantic clusters via topical maps

- Track GSC’s crawl stats and errors weekly

Conclusion: Semantic SEO Begins With Structural Precision

Semantic relevance alone is not enough.

Google is an economic engine—if your site wastes its resources, you will lose the semantic game.

- Structure precedes semantics.

- Retrieval efficiency is an SEO ranking signal.

- Semantic SEO must bridge content quality and technical optimization.

Coming in Part 12: What is Crawl Budget in SEO? How It Influences Semantic Indexing and Ranking Performance

Disclaimer: The embedded video is recorded in Bengali. You can watch it with YouTube's auto-generated English subtitles (CC), which may contain errors in wording and spelling. We are not responsible for those errors.